Just for giggles, following up on my pondering regarding the SQLite schema within NetNewsWire, I poked around in the DB and pulled the schemas:

Thinking out loud. With a nice library around the Feedbin API it wouldn’t be too hard to grab the data and stuff it into SQLite. Alternatively, a Feedbin account could be registered with NetNewsWire and then the underlying SQLite DB inspected.

The former seems more elegant while the latter is radically pragmatic.

If nothing else, poking around in the NetNewsWire SQLite DB probably illustrates a highly performant SQL data model and schemas for RSS data and feed management. Or even better, just read the source code.

Link parkin’, since I have a largish collection of largish csv files I’m interested in processing: zsv

Preliminary performance results compare favorably vs other CSV utilities (xsv, tsv-utils, csvkit, mlr (miller) etc). Below were results on a pre-M1 macOS MBA; on most platforms zsvlib was 2x faster, though in some cases the advantage was smaller (e.g. 15-25%)

Link parkin’. A couple of publicly available modules for taking personal data and stuffing it into an SQLite database, congruent with Simon Willison’s Dogsheep initiative

Dogsheep is a collection of tools for personal analytics using SQLite and Datasette.

I’ve been working on automating metadata additions to my Fabric collection using information from Discogs. I was poking around for cli ways, especially via ffmpeg, to add the info to a music file and chanced across a really useful gist.

A quick guide on how to read/write/modify ID3 metadata tags for audio / media files using ffmpeg.

At the bottom of the gist is a mention of ffprobe. Much more appropriate for the task at hand, especially since it can generate output in JSON.

ffprobe gathers information from multimedia streams and prints it in human- and machine-readable fashion.

… ffprobe output is designed to be easily parsable by a textual filter, and consists of one or more sections of a form defined by the selected writer, which is specified by the print_format option.

Although to be fair, ffprobe doesn’t seem to be able to write metadata to a file.
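As a rough sketch of the read side, the snippet below builds an ffprobe command that asks for JSON and pulls the tag dictionary out of the result. The flags are standard ffprobe options; the sample JSON is a made-up, trimmed-down stand-in for real output, and the helper names are hypothetical.

```python
import json
import subprocess


def ffprobe_command(path: str) -> list[str]:
    """Build an ffprobe call that prints container-level metadata as JSON."""
    return ["ffprobe", "-v", "quiet", "-print_format", "json", "-show_format", path]


def extract_tags(ffprobe_json: str) -> dict[str, str]:
    """Pull the tags out of ffprobe's JSON, lower-casing the keys."""
    info = json.loads(ffprobe_json)
    tags = info.get("format", {}).get("tags", {})
    return {key.lower(): value for key, value in tags.items()}


def probe_tags(path: str) -> dict[str, str]:
    """Run ffprobe (assumed to be on PATH) and return the file's tags."""
    result = subprocess.run(
        ffprobe_command(path), capture_output=True, text=True, check=True
    )
    return extract_tags(result.stdout)


# Hypothetical sample output, for illustration only.
SAMPLE = (
    '{"format": {"filename": "mix.m4a", '
    '"tags": {"ARTIST": "Example DJ", "album": "Example Mix"}}}'
)
print(extract_tags(SAMPLE))
```

Lower-casing the keys is just a convenience, since tag capitalization tends to vary between container formats.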

Bonus! ffprobe tips

Don’t know why, but I was reminded that Simon Willison had a neat utility to process data exported from Apple HealthKit, specifically from an Apple Watch: healthkit-to-sqlite

Convert an Apple Healthkit export zip to a SQLite database

Includes export instructions from your watch. Worked surprisingly well.

The neat part is that you can then use Datasette and the Datasette cluster map plugin to visualize outdoor workouts on a map. And of course there’s always good old, exploratory data analysis using sqlite and Pandas.

I was dorking around last night and came up with the following in about 15 minutes of work in a xonsh command line session.

    from pathlib import Path

    for fname in !(ls *.wav):
        p = Path(fname.strip())
        ffmpeg -i @(p) -c:a libfdk_aac -b:a 128k @(f"{p.stem}.m4a")

🎉 💥 💥 🎉
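For anyone without xonsh handy, roughly the same loop can be sketched in plain Python with subprocess. The function names are hypothetical, and like the original command this assumes an ffmpeg build that includes the libfdk_aac encoder.

```python
import subprocess
from pathlib import Path


def aac_command(wav: Path) -> list[str]:
    """Mirror the xonsh line: encode one WAV to a 128k AAC .m4a next to it."""
    return [
        "ffmpeg", "-i", str(wav),
        "-c:a", "libfdk_aac", "-b:a", "128k",
        str(wav.with_suffix(".m4a")),
    ]


def convert_all(directory: Path = Path(".")) -> None:
    """Convert every .wav in the directory, stopping on the first ffmpeg error."""
    for wav in sorted(directory.glob("*.wav")):
        subprocess.run(aac_command(wav), check=True)
```

Calling `convert_all()` from the directory holding the WAV files does the batch; building the argument list separately makes it easy to eyeball the command before anything runs.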

At the day job, I got sucked into trying to understand two PostgreSQL data types, timestamp and timestamptz. Thought I knew what I was doing, then read the docs and came away even more confused. Luckily, the folks at Cybertec had a pretty recent blog post on just this topic: Time Zone Management in PostgreSQL.

Next to character encoding, time zones are among the least-loved topics in computing. In addition, PostgreSQL’s implementation of timestamp with time zone is somewhat surprising. So I thought it might be worth to write up an introduction to time zone management and recommendations for its practical use.

The punchline …

Even though it is easy to get confused with time zones, you can steer clear of most problems if you use timestamp with timezone everywhere, stick with IANA time zone names and make sure to set the TimeZone parameter to the time zone on the client side. Then PostgreSQL will do all the heavy lifting for you.

But really, read the whole thing. There’s a lot of nuance and the proper handling of timezones in Postgres is definitely not obvious. I may actually circle back and illustrate what dragged me into this tarpit and how I currently understand things.
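A small Python analogy may help pin down the difference. This is only a sketch of the semantics, not PostgreSQL code: timestamptz keeps an absolute instant and renders it in the session's TimeZone, while plain timestamp keeps the wall-clock value exactly as typed. Fixed UTC offsets stand in for session time zones here to avoid any tzdata dependency; real setups should use IANA names as the post recommends. All function names are hypothetical.

```python
from datetime import datetime, timedelta, timezone

# Fixed offsets standing in for a session's TimeZone setting (an assumption
# for this sketch; real configurations should use IANA zone names).
CHICAGO_WINTER = timezone(timedelta(hours=-6), "CST")
VIENNA_WINTER = timezone(timedelta(hours=1), "CET")


def store_as_timestamptz(wall_clock: datetime, session_tz: timezone) -> datetime:
    """Read a zone-less literal in the session zone, keep only the UTC instant."""
    return wall_clock.replace(tzinfo=session_tz).astimezone(timezone.utc)


def render_timestamptz(stored: datetime, session_tz: timezone) -> str:
    """Show the stored instant in whatever zone the reading session uses."""
    return stored.astimezone(session_tz).isoformat()


def store_as_timestamp(wall_clock: datetime, session_tz: timezone) -> datetime:
    """Plain timestamp: the value is kept as typed and the zone is ignored."""
    return wall_clock


entered = datetime(2022, 1, 15, 9, 0)
instant = store_as_timestamptz(entered, CHICAGO_WINTER)
print(render_timestamptz(instant, CHICAGO_WINTER))  # 2022-01-15T09:00:00-06:00
print(render_timestamptz(instant, VIENNA_WINTER))   # 2022-01-15T16:00:00+01:00
print(store_as_timestamp(entered, CHICAGO_WINTER).isoformat())  # 2022-01-15T09:00:00
```

The same stored instant reads as 09:00 in one session and 16:00 in another, which is the "heavy lifting" the quoted punchline refers to; the plain timestamp never moves, whoever reads it.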

I think I discovered their blog during my last writing hiatus, so time to give ’em some love. The fine folks at fly.io have been doing excellent technical blogging for a few years now. Thomas Ptacek’s stuff, like this one on process isolation, is the pinnacle but there’s all around good material from many quarters. For example, here are some quality posts on Firecracker

It’s a small start, but my musicapp command line utility, for working with XML out of Apple’s Music.app, can be used in a one liner with sqlite-utils:

Link parkin’: pg_timetable

pg_timetable is an advanced job scheduler for PostgreSQL, offering many advantages over traditional schedulers such as cron and others. It is completely database driven and provides a couple of advanced concepts.

In any serious development effort, I’m likely to have PostgreSQL in the stack so might as well take advantage of it for scheduled tasks too. One less piece of kit to worry about.

Plus: Pavlo Golub’s series of blog posts on pg_timetable. Pavlo is the creator of pg_timetable. Part of CYBERTEC’s PostgreSQL Professional Services.

Link parkin’: sqlite-utils.

CLI tool and Python utility functions for manipulating SQLite databases

This library and command-line utility helps create SQLite databases from an existing collection of data.

Can’t believe I haven’t stashed Simon Willison’s insanely useful toolkit on this here blog. Makes it insanely easy to do stuff with sqlite databases from the command line and from within Python. For example

If you have data as JSON, you can use sqlite-utils insert tablename to insert it into a database. The table will be created with the correct (automatically detected) columns if it does not already exist.
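To get a feel for what "automatically detected columns" means, here is a stdlib-only approximation of the idea. To be clear, this is not how sqlite-utils is implemented and it handles far fewer cases; the function name and the type mapping are assumptions made for the sketch.

```python
import json
import sqlite3

# Simplified guess at column types from Python values (assumption for this sketch).
SQLITE_TYPES = {int: "INTEGER", float: "REAL", str: "TEXT"}


def insert_json(conn: sqlite3.Connection, table: str, json_text: str) -> int:
    """Create the table from the keys seen in a JSON array, then insert the rows."""
    rows = json.loads(json_text)
    columns: dict[str, str] = {}
    for row in rows:
        for key, value in row.items():
            # First sighting of a key decides its column type.
            columns.setdefault(key, SQLITE_TYPES.get(type(value), "TEXT"))
    names = list(columns)
    col_defs = ", ".join(f'"{name}" {columns[name]}' for name in names)
    conn.execute(f'CREATE TABLE IF NOT EXISTS "{table}" ({col_defs})')
    quoted = ", ".join(f'"{name}"' for name in names)
    marks = ", ".join("?" for _ in names)
    conn.executemany(
        f'INSERT INTO "{table}" ({quoted}) VALUES ({marks})',
        [tuple(row.get(name) for name in names) for row in rows],
    )
    conn.commit()
    return len(rows)


conn = sqlite3.connect(":memory:")
data = '[{"title": "A", "year": 2001}, {"title": "B", "year": 2002, "rating": 4.5}]'
print(insert_json(conn, "albums", data))
print(conn.execute("SELECT title, year, rating FROM albums ORDER BY year").fetchall())
```

Keys missing from a record simply land as NULL. The real tool covers much more (nested values, primary keys, altering existing tables), which is a good argument for using it rather than rolling your own.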

PostgreSQL is totally awesome. But sometimes it’s more useful to have pure file(s) storage and query for your data. Herewith a collection of data storage engines that somewhat cover the space of more well-known engines:

Previously I mentioned libpytunes and went to kick the tires. I thought it was published on PyPI but turns out it wasn’t. So here I am going pip install libpytunes and wondering why I can’t subsequently do an import libpytunes.

I’ve always known you can do pip install from a git repository, but a while back Adam Johnson wrote up some of the details. There are plenty of other good overviews out there (e.g. Simon Willison’s); this one just caught my eye recently.

Now pip install git+https://github.com/liamks/libpytunes actually installs the module and my import statement works as expected. Bonus, you can put git+https://github.com/liamks/libpytunes into requirements.txt and setup.py files as well, to achieve similar results.

Unfortunately the liamks version got hit by a trivial API change in plistlib in Python 3.9, so there was still breakage on my end, but Anirudh Acharya has a forked repo with the necessary one-liner fix. Of course I used pip install git..., and now my Music.app experiments are proceeding apace.

Link parkin’: Music Library Exporter

Music Library Exporter allows you to export your library and playlists from the native macOS Music app.

The library is exported in an XML format, and is compatible with other applications, services, and tools that rely on the Music (previously iTunes) XML library format.

🎉 🎊 🥳 BONUS!! CLI SUPPORT 🥳 🎊 🎉

Aside from the main Music Library Exporter application, this project also includes a command-line program called library-generator.

Now licking my chops for some serious Music.app automation, although I’m a little nervous about compatibility. Will give it a test drive and report back.

Can’t believe I’ve never posted about GH Archive

Open-source developers all over the world are working on millions of projects: writing code & documentation, fixing & submitting bugs, and so forth. GH Archive is a project to record the public GitHub timeline, archive it, and make it easily accessible for further analysis.

There’s a solid 10+ years of freely available GitHub spewed JSON to practice data spelunking, system benchmarking, and query hacking against.
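As a first spelunking exercise, something like the sketch below would tally event types in one archive file. It assumes the archive format described on the GH Archive site: gzip-compressed, one JSON event per line, each with a `type` field. The function name is hypothetical and the sample bytes are fabricated for illustration.

```python
import gzip
import json
from collections import Counter


def count_event_types(gz_bytes: bytes) -> Counter:
    """Tally the `type` field across newline-delimited JSON events."""
    counts: Counter = Counter()
    for line in gzip.decompress(gz_bytes).splitlines():
        if line.strip():
            counts[json.loads(line)["type"]] += 1
    return counts


# Fabricated three-event archive, standing in for a real hourly file.
sample = gzip.compress(
    b'{"type": "PushEvent"}\n{"type": "WatchEvent"}\n{"type": "PushEvent"}\n'
)
print(count_event_types(sample).most_common())
```

Real hourly files are published under names like `https://data.gharchive.org/2015-01-01-15.json.gz`, so feeding one in is a matter of downloading the bytes first.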

Update: The CNCF DevStats project puts that data to interesting use through application of actual CNCF projects. So meta!

This is a toolset to visualize GitHub archives using HA Postgres databases and Grafana dashboards. Everything is open source so that it can be used by other CNCF and non-CNCF open source projects. The only requirement is that project must be hosted on a public GitHub repository/repositories. Project is deployed using Equinix bare metal Kubernetes nodes and deployed using a Helm chart. It uses many more CNCF projects under the hood.

At least Google couldn’t find any such post on my site. Maybe that’s a hint to implement some real local search. 🤣

Link parkin’: PyOPML

Welcome! This documentation is about PyOPML, a Python package meant to read, manipulate and write OPML 2.0 files.

Stashed in light of previously mentioning the OPML that the Overcast app generates.
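For the simple case of pulling feed URLs out of an OPML export, the standard library gets surprisingly far. The sketch below deliberately uses xml.etree rather than PyOPML, whose API is not reproduced here; the sample document and function name are made up, and the `xmlUrl` and `text` attributes follow the OPML 2.0 subscription-list convention.

```python
import xml.etree.ElementTree as ET


def feed_urls(opml_text: str) -> list[tuple[str, str]]:
    """Return (title, feed URL) pairs for every outline carrying an xmlUrl."""
    root = ET.fromstring(opml_text)
    feeds = []
    for outline in root.iter("outline"):
        url = outline.get("xmlUrl")
        if url:
            title = outline.get("text") or outline.get("title") or ""
            feeds.append((title, url))
    return feeds


# Hypothetical export with one folder and one subscription.
SAMPLE_OPML = """
<opml version="2.0">
  <head><title>Example subscriptions</title></head>
  <body>
    <outline text="Podcasts">
      <outline type="rss" text="Example Show" xmlUrl="https://example.com/feed.xml"/>
    </outline>
  </body>
</opml>
"""
print(feed_urls(SAMPLE_OPML))
```

Folder outlines without an `xmlUrl` are skipped, so nested groupings flatten out naturally. Anything involving writing or validating OPML is where a dedicated package earns its keep.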

I’ve been looking for a way to jump start my blog post authoring process via text expansion or templating within Emacs. Just haven’t had the time to go digging. Luckily a potential solution came to me: YASnippet

YASnippet is a template system for Emacs. It allows you to type an abbreviation and automatically expand it into function templates. … The snippet syntax is inspired from TextMate’s syntax, you can even import most TextMate templates to YASnippet.

Source code and info from EmacsWiki.

YouTube demo embed after this break …

Even though I’ve expounded on the coolness of xonsh, I haven’t put it to use in full anger yet. I’m thinking it might best be leveraged as an interactive shell workspace, sort of like Jupyter Notebooks but without the Web browser bits and much more of a CLI.

But I’ve been thinking about how to make launching a new space as cheap and mindless as possible. Enter scripting the iTerm2 terminal emulator using its Python API. From an example of scripting iTerm2 straight from another command line:

This script demonstrates two concepts:

Launching iTerm2 using PyObjC and running the script only after it is launched.

Creating a window that runs a command.

So if the command is “kickoff xonsh with some args,” either in an existing or newly created virtualenv, it becomes almost trivial to fire off new interactive spaces.

Just had to acknowledge Simon Willison’s 20th anniversary of blogging. I have a very loose tie in that I used to have an appointment at the Medill School of Journalism. At the time, the concept of Content Management Systems wasn’t ingrained in media circles. Working with a colleague, we introduced a rudimentary platform that was used as part of every MS student’s stint in Medill’s downtown Chicago newsroom.

So of course when I first heard of Django and how it was born in a newsroom, I had to have Adrian Holovaty come up to Evanston and give a talk. For a while, Adrian and I lived relatively close by in Chicago and would bump into each other at ChiPy meetups. Thus the extremely loose tie to Simon.

I really enjoy and admire Simon’s current stream of work, especially on Datasette. Despite the volume, his blog is one of a handful that I look forward to with anticipation for new content. That guy can crank out some code, but also has good taste in problems, and will go down a few layers into the technology. Here’s to many more posts to come!

I wanted to start liberating my OS X Music.app data, noticing that you can “Export… > iTunes Library” to spit out XML to the file system. Next stop was parsing the XML. Hang on, there’s gotta already be a Python module(s) for that, right?

itunesLibrary was the first port of call. It inhaled my 18 MB of XML library file, but the object interface didn’t click with me. It was sort of Pythonic dictionary like, but not quite.

Turns out the exported XML is just an Apple property list (plist) file and there’s a plist parsing library in the Python standard library. libpytunes is a thin wrapper around plistlib. I need to give it a longer test drive, but it seems a bit more complete than itunesLibrary.
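Since it is just a plist, a first pass needs nothing beyond the standard library. The sketch below assumes the usual export layout, a top-level dictionary with a `Tracks` dictionary whose entries carry keys such as `Name` and `Artist`; the sample library is generated in memory and entirely made up, and the function name is hypothetical.

```python
import plistlib


def track_summaries(library_bytes: bytes) -> list[tuple[str, str]]:
    """Return sorted (artist, track name) pairs from an exported library plist."""
    library = plistlib.loads(library_bytes)
    tracks = library.get("Tracks", {})
    return sorted(
        (track.get("Artist", ""), track.get("Name", "")) for track in tracks.values()
    )


# Made-up two-track library, serialized as an XML plist for the demo.
SAMPLE_LIBRARY = plistlib.dumps(
    {
        "Tracks": {
            "101": {"Track ID": 101, "Name": "Second Song", "Artist": "Example Artist"},
            "102": {"Track ID": 102, "Name": "First Song", "Artist": "Another Artist"},
        }
    }
)
print(track_summaries(SAMPLE_LIBRARY))
```

For a real export, reading the file in binary mode and passing the bytes in (or calling `plistlib.load` on the open file) should be all that changes.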

Beyond intermittently doing a manual slog through menus, I was hoping there might be a way to automate this via Apple’s scripting machinery. Doug’s Applescripts is the go to in this space, especially for things related to the OS X Music app. Apparently, Doug is not sanguine on leveraging the XML format or Apple’s replacement:

So anyway, the XML has finally gone away, effectively, since it is no longer automatically exported.

I’ve been trying to incorporate the ITLibrary framework into my projects whenever I can, especially for apps that need to display lots of tracks or playlists (like Media Folder Files Not Added).

But ITLibrary was apparently last updated for macOS 10.13. And now that iTunes has been split out into the media apps, it’s usefulness over the XML file has not been improved.

(And please. Don’t let me hear anyone suggest some groovy way of exporting the XML automatically. Forget about the XML. Unless it’s for backups or something.)

And mind, this was in October 2019. I suspect much hasn’t changed since.

But for me, the XML is enough in the short term. If I ever get working daily snapshots I’ll be happy. The real fun will begin when transmogrifying the data into something ingestible by an SQL engine and thence marrying it with Discogs Data.

Apropos Elle Driver, “You know I’ve always liked that word … ’sanguine’ … so rarely have an opportunity to use it in a sentence.”

Link parkin’: LF AI & Data Foundation.

The LF AI & Data Foundation supports open source projects within artificial intelligence and the data space.

This overview deck provides a lot more detail.

Didn’t realize that the Linux Foundation had an Artificial Intelligence and Data thrust, with a bushel of projects under its umbrella. A few of them are having a pretty big industry impact.

Discovered this via poking around at the Milvus repository:

Milvus is an open-source vector database built to power embedding similarity search and AI applications. Milvus makes unstructured data search more accessible, and provides a consistent user experience regardless of the deployment environment.

Milvus 2.0 is a cloud-native vector database with storage and computation separated by design. All components in this refactored version of Milvus are stateless to enhance elasticity and flexibility. For more architecture details, see Milvus Architecture Overview.

This looks like an interesting set of presentations: “Have You Tried Rubbing A Database On It?”

HYTRADBOI was a day of lightning talks about turning a data-centric lens onto familiar problems to yield strange new solutions (and maybe exciting new problems). Talks ranged from wild ideas and unlikely experiments to cutting-edge research and production war stories.

Being loosely affiliated with the Berkeley Computer Systems Mafia (TM), the general theme of “let’s talk intersection of data management and everything else” is near and dear to my heart. Gotta give a shout out to CIDR as an analog, but strictly from the academic R&D perspective. And definitely without the radical format experiment!

Really useful background information and conference postmortem

Background

Fundamentally, every computer system is about storing, moving and transforming data. The line between operating system, database and programming language is somewhat arbitrary - a product of specific problems, available hardware and historical accident.

But today the problems and the hardware have changed dramatically, and as a result we’re starting to see people experimenting with redrawing the lines.

Postmortem

A lot of people expressed a surprising amount of support for the conference. I think there is a lot of pent up demand for a database conference that isn’t just SaaS ads. That support meant that people were very willing to help promote the conference and were forgiving of the many technical issues. Many people bought tickets knowing that they wouldn’t be able to attend, because they wanted something like this to exist.

Link parkin’, Feedbin API Documentation

This is the official documentation for the Feedbin REST-style API.

Does what it says on the tin. Guess who has a few years’ worth of starred items to retrieve?

P. S. Can’t recommend Feedbin enough as a feed aggregator and reader. Maybe it’s selective memory, but feels just as good as the late lamented Google Reader, even though I don’t use the Web frontend much. That Feedbin has a solid client ecosystem, of which two apps I use daily, speaks well of the business. I pay for it and my subscription is on auto renewal. Rock solid and no drama.

Now let’s see if I can’t take advantage of that API.
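A first stab might look like the sketch below: fetch the starred entry IDs, pull the entries in batches, and stash a few fields in SQLite. The endpoint paths, HTTP Basic auth, the 100-ID batch size, and the entry field names reflect my reading of the Feedbin docs and should be double checked there; the table layout and function names are hypothetical. Only the storage half runs without credentials.

```python
import base64
import json
import sqlite3
import urllib.request

API = "https://api.feedbin.com/v2"  # base URL as given in the Feedbin docs
FIELDS = ("id", "feed_id", "title", "url", "published")


def get_json(path: str, user: str, password: str):
    """GET one API path with HTTP Basic auth and decode the JSON body."""
    request = urllib.request.Request(f"{API}/{path}")
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    request.add_header("Authorization", f"Basic {token}")
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def fetch_starred(user: str, password: str, batch: int = 100) -> list[dict]:
    """Fetch starred entry IDs, then the entries themselves in batches."""
    ids = get_json("starred_entries.json", user, password)
    entries: list[dict] = []
    for start in range(0, len(ids), batch):
        chunk = ",".join(str(i) for i in ids[start:start + batch])
        entries.extend(get_json(f"entries.json?ids={chunk}", user, password))
    return entries


def store_entries(conn: sqlite3.Connection, entries: list[dict]) -> int:
    """Upsert entries into a small hypothetical table; return the row count."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS starred ("
        "id INTEGER PRIMARY KEY, feed_id INTEGER, title TEXT, url TEXT, published TEXT)"
    )
    conn.executemany(
        "INSERT OR REPLACE INTO starred VALUES (:id, :feed_id, :title, :url, :published)",
        [{field: entry.get(field) for field in FIELDS} for entry in entries],
    )
    conn.commit()
    return conn.execute("SELECT COUNT(*) FROM starred").fetchone()[0]
```

Usage would be along the lines of `store_entries(sqlite3.connect("feedbin.db"), fetch_starred(user, password))`. Keying on the entry ID makes reruns idempotent, which matters when the plan is to poke at this repeatedly.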

So I’ve been struggling for the longest time with my personal dotfiles repository trying to share it between personal and work activities. Two separate branches seemed to eliminate some of the difficulties, but SSH access was quite frustrating. It was challenging dealing with multiple public keys, across multiple machines, one of which typically was used infrequently. Suffice it to say I banged my head against a lot of SSH and git config file walls.

Then I just gave up and decided to push the work branch to a work GitHub account and deal with cross pollination via merges over HTTP. Part of the problem was attempting to keep the personal repo private for no good reason. I really thought at some point I’d be adding public keys into the repository just to make life easier and became totally paranoid. YAGNI.

The final piece of the puzzle was sending collaboration invitations between the two accounts, thereby providing push access to both repos with one SSH key for each persona. 🤦 C’mon man!

Now it’s fairly straightforward to share changes between the two configurations. I’d dare say I could even go back to making the personal repo private, but what’s the point.

Link parkin’, pyLast

A Python interface to Last.fm and other API-compatible websites such as Libre.fm.

Will come in handy for downloading years’ worth of scrobbles from my account and conducting some analysis.

First off, I really need to generate some basic descriptive statistics across all of this Discogs data. Pick off the low hanging fruit.

Having said that, since I’ve got the data in a PostgreSQL instance, including my own custom views, some kind of HTTP based API to access the data would provide an interesting prototype.

Enter PostgREST

PostgREST is a standalone web server that turns your PostgreSQL database directly into a RESTful API. The structural constraints and permissions in the database determine the API endpoints and operations.
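To give a flavor of what querying such an API looks like, the sketch below builds a PostgREST-style URL using its `column=operator.value` filter syntax and the `select` parameter. The view name `fabric_releases` and its columns are hypothetical; port 3000 is PostgREST's default.

```python
from urllib.parse import urlencode


def postgrest_url(base: str, relation: str, **params) -> str:
    """Build a query URL for a table or view exposed by PostgREST."""
    # Keep '*' and ',' readable; PostgREST accepts them percent-encoded too.
    query = urlencode(params, safe="*,")
    return f"{base.rstrip('/')}/{relation}?{query}"


url = postgrest_url(
    "http://localhost:3000",
    "fabric_releases",        # hypothetical view name
    select="id,title",        # only return these columns
    title="ilike.*fabric*",   # case-insensitive pattern match
    limit=5,
)
print(url)  # http://localhost:3000/fabric_releases?select=id,title&title=ilike.*fabric*&limit=5
```

Because custom views show up as endpoints just like tables, the views already sitting in the database would become queryable resources with no extra application code.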

What would oEmbed cards look like for pure textual playlists?

This sat in the drafts folder for a bit, but I finally decided to just hit publish and stop seeking perfection.

Well that was a mildly annoying adventure discovering how to get code syntax highlighting working. Pelican’s markdown support includes CodeHilite by default but I couldn’t figure out how to actually trigger it. Turns out once I installed the Pygments module, things kicked in.

Anyway, who knew the language of the first code segment to appear on this blog would be SQL? Using some handy regular expression features of Postgres, I layered some views on top of data imported from discogs-xml2db. The target was getting an extraction of releases from the Fabric and FabricLive series. Still a fair amount of data normalization needed to be done, but at least I’ve got 100% recall with not too much extra stuff, and that’s only because the titles aren’t quite consistent. Ultimately had to resort to explicitly blacklisting some rows.

Code and example output below the fold

So I took the discogs-xml2db tool and ran it against the Discogs Data, May 2022 release. I got back 8.1 GB, 😱, of csv data to ingest into PostgreSQL. I’ve done it for previous months and it’s ingested just fine, but there’s some interesting exploration that can be done with the csv data, before and after ingest. But I’m gonna need a few tools:

- VisiData, “VisiData is an interactive multitool for tabular data.”

- xsv, “xsv is a command line program for indexing, slicing, analyzing, splitting and joining CSV files.”

- Data Fluent for PostgreSQL, “Build a better understanding of your data in PostgreSQL.”

Some might call me an Emacs tramp (🤣), but I’m referring to the Emacs mode that allows for editing remote files over ssh connections.

TRAMP (Transparent Remote Access, Multiple Protocols) is a package for editing remote files, similar to AngeFtp or efs. Whereas the others use FTP to connect to the remote host and to transfer the files, TRAMP uses a remote shell connection (rlogin, telnet, ssh). It can transfer the files using rcp or a similar program, or it can encode the file contents (using uuencode or base64) and transfer them right through the shell connection.

The overall experience is pretty much seamless, while not having to deal with a bunch of warts related to distributed file systems such as SMB, AFS, Dropbox, et al. Works great even with version control tools such as magit.

The fact that TRAMP can operate over telnet (😱) of all protocols should tell you it’s ancient though. An elegant weapon, for a more civilized age.

By my math, I went 29 for 31 on daily posts last month. I was hoping to run the table after a long period of infrequent, intermittent posting. There’s a certain symmetry in how I screwed up right at the beginning of the month and right at the end of the month, but 20+ days in between is not too bad.

Speaking of streaks, 1 year ago I set about to listen to more music. The screen capture above, from the Streaks iOS app on my phone, emphasizes that I’ve managed to do that for 365 days straight. 🎊 🥳 🎉 Listening in this case means a minimum of 3 songs, but almost uniformly I got in a full “CD” if not multiples.

What always gets me about these types of streaks is overcoming those crappy days. Days when you feel wishy washy and think you might have COVID. Days when work runs long and you feel dead tired. Days when your kids get on your last nerve. Days when you feel like you let everyone down.

But a little music always soothes the soul, even if one falls asleep in the middle of the mix 🤣.

I have a wealth of starred items stashed away in my feedreaders, plural. Link parkin’ a few things of note. Only goal is to send you off to some pieces loosely joined. Not too much thought put into it.

Recently learned about reader, a minimalist Python module for managing collections of RSS (and other) feeds.

Mark Litwintschik does his usual thorough data spelunking against The Streets of Monaco

Simon Willison was a guest on architecture notes providing a great overview of datasette

Matt Webb talks about his blog writing approach. I use Feedbin much the same way he does. I wonder how many feeds I’m subscribed to?

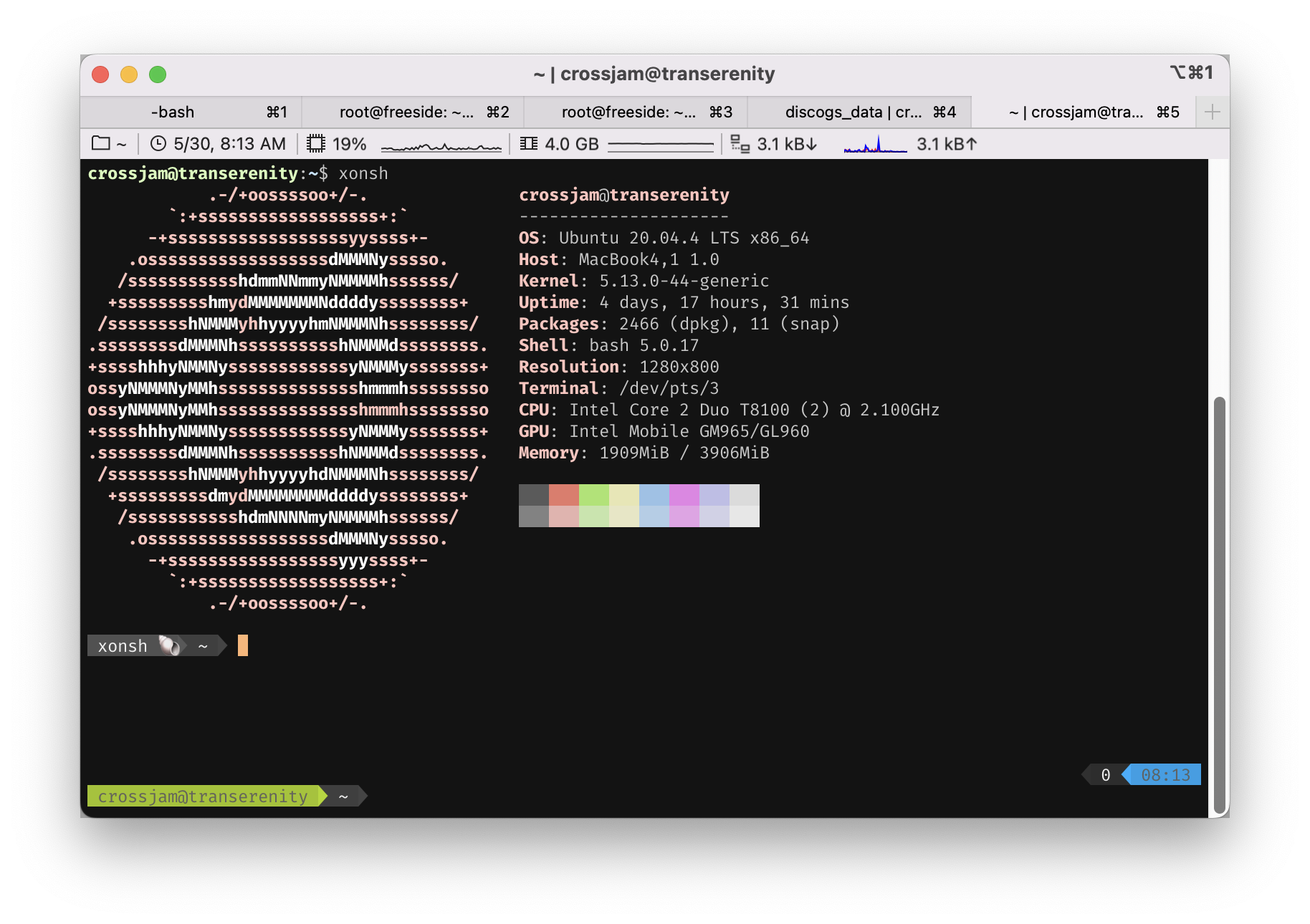

Yesterday I lost the plot by yak shaving my shell setup installation process to work better under Ubuntu. The above capture illustrates that lots of progress was made, but then I forgot to actually post. Oh well!

Peter Wang gave a keynote at PyCon US 2022. Apparently it excited a lot of people. The topic was PyScript, a project to put Python in the browser via WebAssembly.

PyScript is a framework that allows users to create rich Python applications in the browser using HTML’s interface and the power of Pyodide, WASM, and modern web technologies. The PyScript framework provides users at every experience level with access to an expressive, easy-to-learn programming language with countless applications.

I’d heard about the tool many times, but I just started using pgcli to interact with PostgreSQL databases from the command line. 🤯

pgcli is really good stuff. Auto-completion, syntax highlighting, Emacs style key bindings, and ssh tunneling, amongst many other great features. And implemented in open source Python (BSD license) to boot. What’s not to love?

Why did I wait so long? What the hell was I thinking?

Another circumnavigation about the center of the solar system completed.

By many measures, year-over-year I’m quite a bit healthier, wealthier, and wiser.

However…….

The Godfather is one of my favorite movies. The first spoken words are:

I believe in America.

Gettin’ harder to maintain belief in these times.

We’ll just have to keep on keepin’ on.

Back to improving my Emacs environment. Since I conquered emojis in Emacs, now it’s time to deal with spelling since typos in my blog posts drive me up a wall.

I vaguely had a recollection about some *spell package for Emacs but couldn’t remember the specifics. Turns out it was the built-in flyspell, conveniently covered by Ryan Moore. Especially useful is the keybinding for the mouse click on Macbook trackpads that lets you see variant spellings.

That led me to thinking about text expansion to help cut down on some of my more frequent lapses (looking at you occasionally). And what would an MPR Emacs post be without a mention of Mickey Petersen (go buy another copy of Mastering Emacs). Emacs has a venerable abbrev package and Petersen ably addresses its usage for spell correction. Job done, but there’s bonus material on using hippie-expand which does a whole lot of interesting dynamic abbreviation expansion, a.k.a. replacements based on text already existing in buffers. If you don’t like hippies, he mentions at least three other text expansion modules 😆.

One of the real reasons I need text expansion is one of my favorite emojis is this one, 😆, which is named, I kid you not

SMILING FACE WITH OPEN MOUTH AND TIGHTLY-CLOSED EYES

Sort of a pain to enter after C-x 8 RET even with tab completion.

Below are some podcast recommendations for those invested in the Python ecosystem.

- The Python Podcast.__init__

- Data Engineering Podcast

- Python Bytes

- Talk Python To Me

- PyBites Podcast

- The Real Python Podcast

- Software Engineering Radio

The first four were previously listed in my Favorite Podcasts 2022 list. The Python Podcast.__init__, Python Bytes, and Talk Python To Me are my gotos, in that rough priority order.

Strictly speaking the Data Engineering Podcast isn’t about Python, but there’s enough overlap with the ecosystem that a lot of Python pops up. The PyBites Podcast is mostly specific to participants in the PyBites community and platform. It’s a little more on the personal and professional development side than straight Python ecosystem. Oddly enough, even though I’m recommending it and I’m a paid subscriber on Real Python, I don’t often listen to episodes from The Real Python Podcast. But when I do, I’m highly satisfied.

I just threw in Software Engineering Radio because it hasn’t been mentioned in this blog yet, but it’s really good and has the occasional Python bits 😆.

Actually, Software Engineering Radio was previously mentioned in Deep Diving Podcasts. We regret the error.

Link parkin’: SuperFastPython

Jason Brownlee seems super excited about Python concurrency mechanisms (threads/processes) and helping developers better understand them. A cursory scan of a few of his tutorials indicates a high level of quality and coverage.

I’ve been doing a bit of Python thread programming in the day job, so these topics are of interest.

© 2008-2025 C. Ross Jam. Licensed under CC BY-NC-SA 4.0. Built using Pelican. Theme based upon Giulio Fidente’s original svbhack, and slightly modified by crossjam.