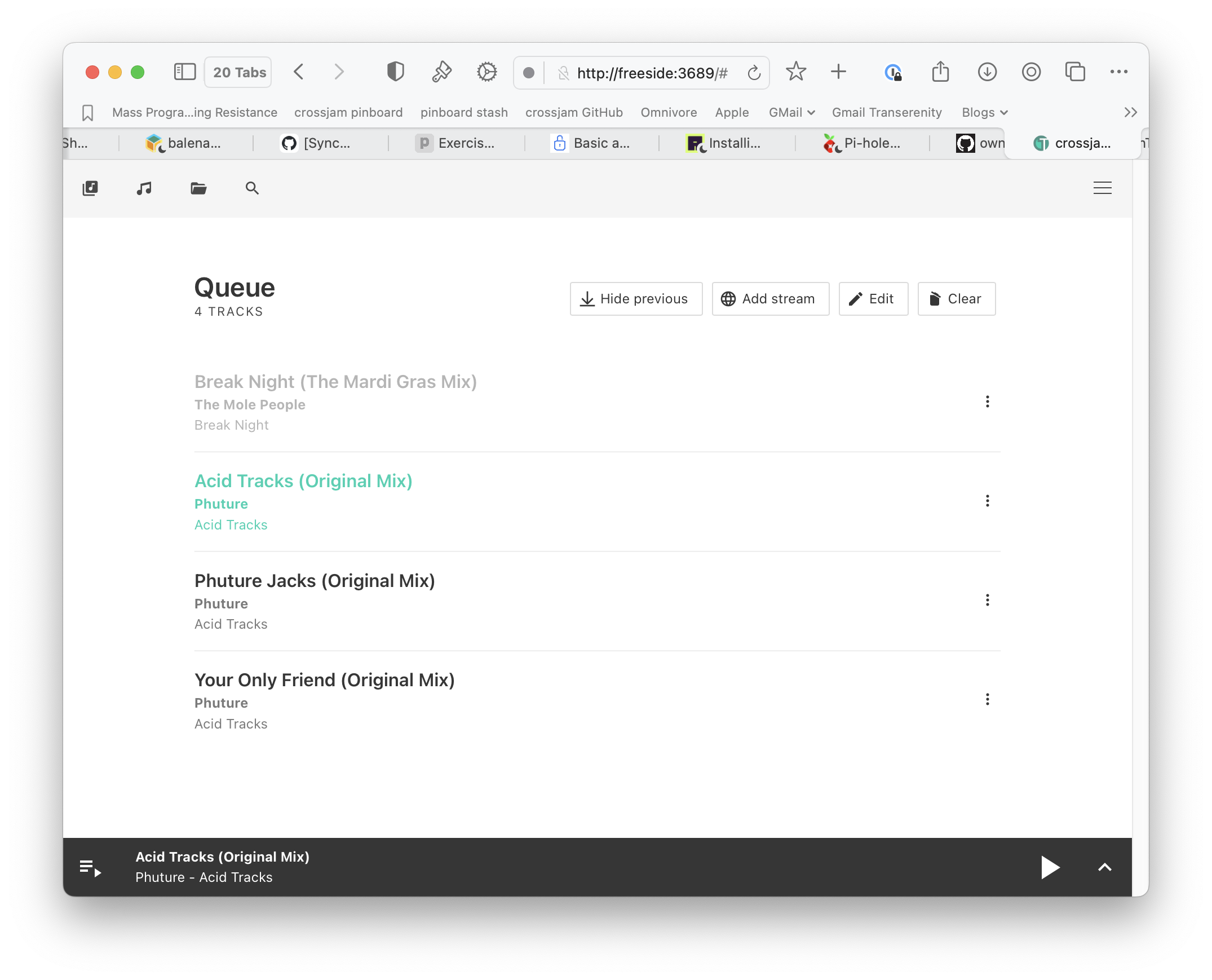

I’ve recently fallen down a rabbit hole of adding DJ mix sets to my Apple Music library. Boiler Room and Fabric have been on my radar for a year or two now, with solid presences on Apple Music. For whatever reason, one day recently I started chasing the “More By …” and “You Might Also Like…” connections underneath a set’s tracklist and discovered:

- The Warehouse Project and

- Glitterbox and

- Tomorrowland and

- Defected Broadcasting and

- The Lost Village and

- Movement and

- you get the picture …

The nice thing is that I’m coming across new-to-me DJs like Carlita, TSHA, Seth Troxler, Skream, Chase & Status, Sonny Fodera, Denis Sulta, and Kilimanjaro. Meanwhile, there’s a heaping helping of old friends with mix sets I’ve never heard before, like Josh Wink, Todd Terry, MK, Roger Sanchez, Armand van Helden, Lil’ Louie Vega, Masters at Work, Marshall Jefferson, and Basement Jaxx.

Some of these sets are bananas in terms of length, including a 5-hour ride with Seth Troxler B2B Skream.

Out of curiosity, I asked the major AI bots to compare and contrast Spotify with Apple Music on this front. Surely Spotify must be in the arena here? Apparently that’s not really the case. Here’s what Claude had to say:

Bottom Line

Apple Music is the clear winner for electronic DJ mixes, offering:

- Professional-grade content: Thousands of expertly crafted continuous DJ mixes

- Industry partnerships: Direct integration with major DJ software and hardware

- Regular updates: Monthly curated mixes and exclusive content from top DJs

- Variety: Comprehensive coverage of electronic music genres

Spotify falls short with mainly user-generated playlist collections rather than true DJ mixes, limited professional content, and no current support for DJ software integration.

For serious electronic music fans and DJs, Apple Music provides a significantly richer and more varied selection of actual DJ mixes compared to Spotify’s more basic offerings.

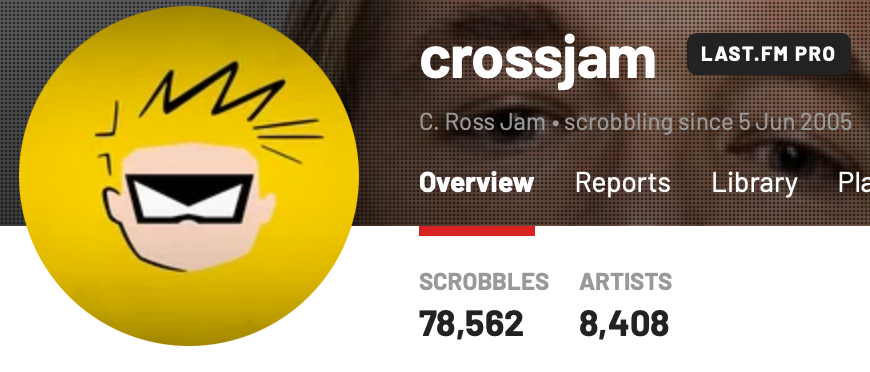

I need to do some further work to verify they’re not all hallucinating, but this matches my general vibe. Full disclosure: I ditched a Spotify Premium subscription a few years back after I got sucked into the Apple One bundle.

What really grabs me about Apple Music is its relationships with curators, promoters, and labels such as The Warehouse Project, Boiler Room, Fabric, and Defected. Plus they have their own series, Beats in Space, and provide DJ-mix-themed genres, although you have to dig a little. This provides some serendipitous discovery without going completely algorithmic. Great folks like Boiler Room keep putting up phenomenal events on Apple Music, and the archives live on in perpetuity for someone like me to fall into.

I have to acknowledge the creator-focused platforms Mixcloud and SoundCloud. They’re both great sources for sets directly from DJs. Bonus: they integrate nicely with the Sonos platform. They just don’t provide the personal track library that Apple Music does, he said grudgingly.

Now if only Apple would do something about their janky desktop app.